A few weeks ago I created a demo for a Spatialized VR Audio Mixer. It is essentially a way to place pieces of audio at locations in 3D space and simulate what their playback would sound like on a large speaker array. But a video is worth 30,000 words per second, so check it out:

Background

When I was 13, I got an amp with a 4-channel mixer on it. One of the first things I did was take every speaker in my parents’ house and hook it up to this mixer. I created a perimeter of speakers, with some above me. I think this was the first song I listened to:

https://www.youtube.com/watch?v=h8IuFl3sMhk

My lab days at Tome have a long history of weird audio projects, including a few Shepard Tone generators and a tool to plot the Mandelbrot set in a field of sound (it sounded okay). In May of this year, I went to Moogfest in Durham, North Carolina. There was a group of researchers there from Virginia Tech with a large speaker array called the Cube, an array of over 150 speakers arranged in a cube-shaped, four-story building. A few people from the Cube helped me get a basic view of the current technology used in HDLAs (high-density loudspeaker arrays), including Reaper, Max, and various Ambisonics toolkits. You can find some interesting technical information on the Cube here.

The Idea

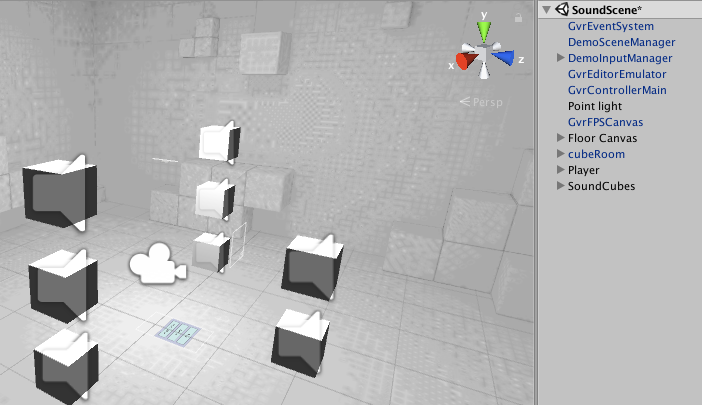

My idea was essentially just to create a virtual sphere within which sounds can be moved and manipulated. I chose to use Unity and Google Cardboard for this project. Both suited the idea because they are simple to work with, and prototyping is time-consuming enough already. I would have loved to develop this on an Oculus Rift or HTC Vive, but those would have taken far too much time for something that I didn’t even really expect to work this well.

The Code

This was the least code I’ve ever written on a lab day. Setting it up couldn’t have been simpler. I used a Cardboard demo, the one where there is a cube in front of you and you can pick it up and drag it. I also used 8 separate audio tracks, from a dinky little song I wrote a few days prior.

- Start out by installing the Google VR SDK here. Follow the instructions for setting up a project with Android or iOS. I chose Android.

- Open up the GVRDemo.unity scene. Mine was in /Assets/GoogleVR/Demos/Scenes/

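- Add this function to a new script called Sound.cs:

    public void Reset() {
        // Restart this cube's track from the beginning.
        // GetComponent is used because recent Unity versions no longer expose the `audio` shortcut.
        AudioSource source = GetComponent<AudioSource>();
        source.Stop();
        source.Play();
    }

- Add a pointer down event to the cube prefab and select Teleport.DragStart for its function. Make a pointer up event and select Teleport.DragEnd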

- Add an AudioSource component to the cube and select a sound file. Disable Loop and set Spatial Blend to 3D

- Attach the Sound script to the cube

- Find the ResetButton object and add a new OnClick event attached to your audio source that calls Sound.Reset

- Make as many copies of the cube as you have tracks and put them wherever you want them to start in the player’s field of hearing. Note that sounds that don’t start immediately need to be exported with empty space at the beginning so that you don’t have to set the timing of tracks in Unity.
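With several cubes, the reset button needs one OnClick entry per cube, and each track restarts on its own call. One possible alternative is a single script that restarts every track against the same audio clock. The sketch below assumes each cube carries the Sound script and an AudioSource as described in the steps; the `MixerReset` class, the `RestartAll` method, and the `leadTime` value are hypothetical names and numbers chosen for illustration, not part of the Google VR demo.

```csharp
using UnityEngine;

// Hypothetical helper (not part of the Google VR demo): restarts every
// cube's track together so the reset button needs only one OnClick entry.
public class MixerReset : MonoBehaviour {
    // Lead time in seconds so every source can be scheduled before playback
    // begins. The 0.1 s default is an assumption, not a tuned value.
    public float leadTime = 0.1f;

    public void RestartAll() {
        // Schedule all tracks against the same point on the audio (DSP) clock.
        double startTime = AudioSettings.dspTime + leadTime;

        // Only objects carrying the Sound script are treated as tracks.
        foreach (Sound track in FindObjectsOfType<Sound>()) {
            AudioSource source = track.GetComponent<AudioSource>();
            if (source == null) {
                continue; // cube without an AudioSource; nothing to restart
            }
            source.Stop();
            source.PlayScheduled(startTime);
        }
    }
}
```

To try it, the script could be attached to any object in the scene and the ResetButton's OnClick pointed at `MixerReset.RestartAll`. Scheduling with `PlayScheduled` rather than calling `Play` in a loop is meant to keep stems that were exported with leading silence lined up with each other.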

The Results

The whole thing worked better than I expected. In fact, it feels so natural that some effects aren’t obvious at first. I realized this once I tested on my computer instead of on my phone: Moving the ‘view’ without actually moving your head makes the ambisonic effects much more obvious.

Potential next steps:

- Make the number and initial position of cubes variable

- Use OSC with REAPER to control positions and record automation of positions

- Add visualization to the cubes to help users know when a cube is playing

- Add controls to the cube to set sound file, volume, and possibly other parameters in real-time

- Convert the app from Cardboard to something simpler (depth perception isn’t very useful here, so we could just use the phone normally with rotation tracking)
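As a rough illustration of the first item, the number and starting positions of the cubes could be driven by a list of clips instead of hand-placed copies. This is only a sketch under assumptions: `TrackSpawner`, `cubePrefab`, `clips`, `radius`, and `height` are hypothetical names, the ring layout is just one possible arrangement, and the prefab is assumed to be the draggable cube with an AudioSource already attached.

```csharp
using UnityEngine;

// Hypothetical spawner: creates one cube per clip, evenly spaced on a
// horizontal ring around the object this script is attached to.
public class TrackSpawner : MonoBehaviour {
    public GameObject cubePrefab;  // draggable cube prefab with an AudioSource
    public AudioClip[] clips;      // one entry per track
    public float radius = 3f;      // distance from the listener (assumed value)
    public float height = 0f;      // vertical offset of the ring

    void Start() {
        for (int i = 0; i < clips.Length; i++) {
            // Angle 0 points along +z, so the first track starts straight ahead.
            float angle = 2f * Mathf.PI * i / clips.Length;
            Vector3 offset = new Vector3(
                Mathf.Sin(angle) * radius, height, Mathf.Cos(angle) * radius);

            GameObject cube = (GameObject)Instantiate(
                cubePrefab, transform.position + offset, Quaternion.identity);

            AudioSource source = cube.GetComponent<AudioSource>();
            if (source == null) {
                continue; // prefab is missing its AudioSource
            }
            source.clip = clips[i];
            source.loop = false;       // matches the "Disable Loop" step
            source.spatialBlend = 1f;  // 1 = fully 3D
            source.Play();
        }
    }
}
```

Attached to the camera rig or an empty object at the listener's position, this would replace the manual "copy the cube once per track" step: dropping more clips into the array in the Inspector adds more cubes.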